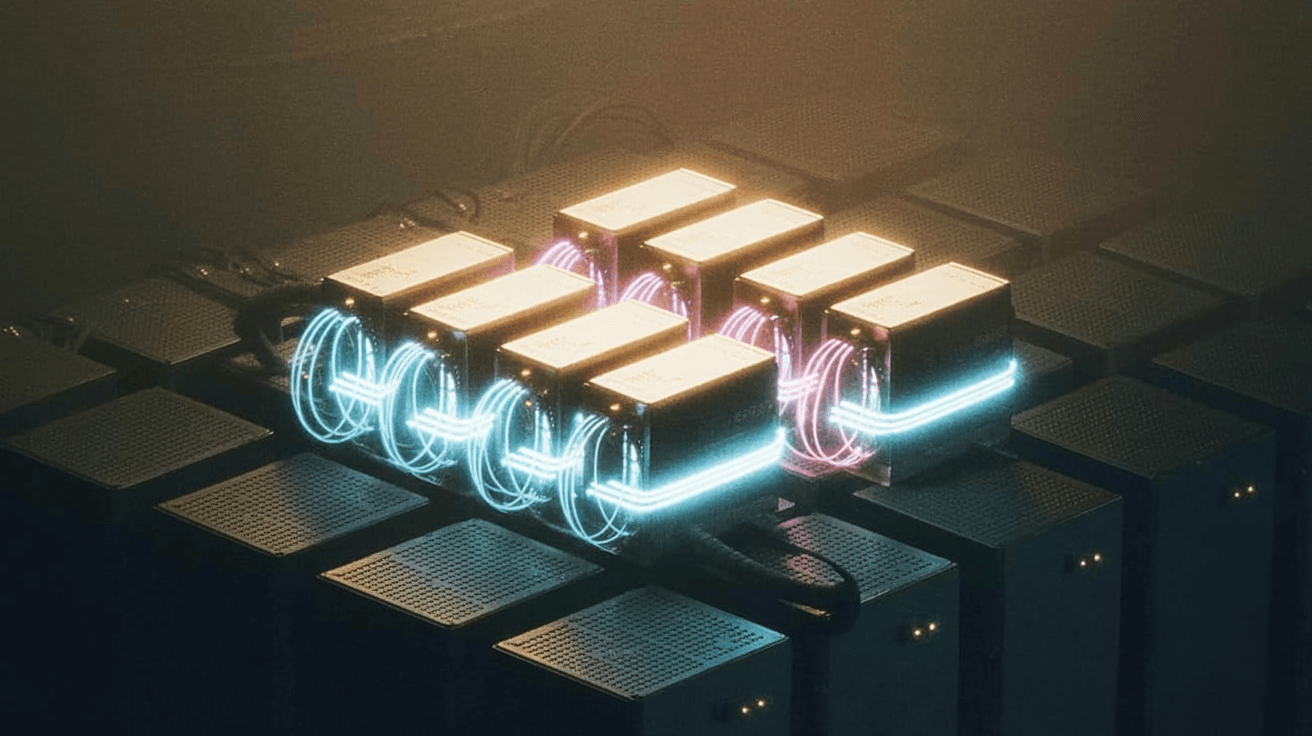

Multi-GPU Inference Scaling: When One GPU Isn’t Enough

As AI models grow in size and complexity, single-GPU setups often fall short, especially for real-time or high-volume inference tasks. Multi-GPU inference scaling is now a critical strategy for enterprises looking to maintain performance, reduce latency, and support larger models without compromising efficiency.

Model Parallelism vs Data Parallelism for Inference

Data Parallelism

Replicates the full model on each GPU. Each replica processes a different subset of the requests at the same time, and the outputs are then gathered. Easy to implement and boosts throughput, but every GPU must hold a complete copy of the weights, so memory is duplicated and a model that does not fit on a single GPU cannot be served this way.
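As a rough illustration of the idea, here is a minimal sketch in plain Python with no GPU library involved. The names `replica_forward`, `shard`, and `data_parallel_infer` are hypothetical, the "model" is a toy function, and a thread pool stands in for per-GPU execution.

```python
from concurrent.futures import ThreadPoolExecutor


def replica_forward(replica_id, inputs):
    """Stand-in for one full model copy on one GPU (hypothetical toy model)."""
    return [x * 2 for x in inputs]


def shard(batch, num_replicas):
    """Split a batch into contiguous, near-equal shards, one per replica."""
    base, extra = divmod(len(batch), num_replicas)
    shards, start = [], 0
    for i in range(num_replicas):
        size = base + (1 if i < extra else 0)
        shards.append(batch[start:start + size])
        start += size
    return shards


def data_parallel_infer(batch, num_replicas):
    """Run every shard on its own replica concurrently, then gather in order."""
    shards = shard(batch, num_replicas)
    with ThreadPoolExecutor(max_workers=num_replicas) as pool:
        # map() preserves shard order, so outputs line up with inputs.
        results = list(pool.map(replica_forward, range(num_replicas), shards))
    return [y for part in results for y in part]
```

Because every replica runs the same complete model, adding replicas raises throughput but does nothing to the per-GPU memory requirement.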

Model Parallelism

Splits a model across devices, either by layers or by tensors within a layer, so that models too large for one GPU's memory can run. Adds inter-GPU communication overhead that must be managed.
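The tensor-splitting variant can be sketched with a single linear layer whose weight matrix is divided by columns. This is a plain-Python illustration under simplifying assumptions: `matmul`, `split_columns`, and `tensor_parallel_linear` are hypothetical helpers, lists stand in for GPU tensors, and the final concatenation represents the gather step that would cross the interconnect in a real deployment.

```python
def matmul(x, w):
    """Multiply a row vector x (length n) by a matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_devices):
    """Give each device a contiguous slice of the weight matrix's columns."""
    cols = len(w[0])
    if cols % num_devices != 0:
        raise ValueError("this sketch assumes the columns split evenly")
    step = cols // num_devices
    return [[row[d * step:(d + 1) * step] for row in w] for d in range(num_devices)]


def tensor_parallel_linear(x, w, num_devices):
    """Each device computes its slice of the output from its weight shard.

    Concatenating the slices is the gather step; in a real system this is
    where the inter-GPU communication cost appears.
    """
    weight_shards = split_columns(w, num_devices)
    partial_outputs = [matmul(x, ws) for ws in weight_shards]  # one per device
    return [y for part in partial_outputs for y in part]
```

Each device stores only its share of the weight columns, which is what lets a layer exceed a single GPU's memory, and the sharded result matches the unsharded `matmul(x, w)`.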

Pipeline Parallelism

Partitions the model into sequential stages across GPUs and streams micro-batches through the pipeline to improve utilization. Can add stage latency if not tuned.
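To see why micro-batching matters for utilization, consider an idealized pipeline in which every stage takes one equal time step per micro-batch. Under that assumption (real stages are rarely perfectly balanced), the sketch below builds the schedule and computes the fraction of stage-time slots doing useful work. The function names are hypothetical.

```python
def pipeline_schedule(num_stages, num_micro_batches):
    """Return, per time step, which micro-batch each stage works on (None = idle)."""
    total_steps = num_stages + num_micro_batches - 1
    schedule = []
    for t in range(total_steps):
        row = []
        for s in range(num_stages):
            mb = t - s  # micro-batch mb reaches stage s at step mb + s
            row.append(mb if 0 <= mb < num_micro_batches else None)
        schedule.append(row)
    return schedule


def utilization(num_stages, num_micro_batches):
    """Fraction of stage-time slots that are busy in the idealized pipeline."""
    total_steps = num_stages + num_micro_batches - 1
    return num_micro_batches / total_steps
```

With 4 stages, a single batch keeps each GPU busy only a quarter of the time, while 8 micro-batches raise that to 8/11. The trade-off is that each request still has to traverse every stage, which is the added stage latency mentioned above.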

GPU Cluster Orchestration & Load Balancing

- Networking & Interconnects – Use NVLink in-server and RDMA/InfiniBand between servers to sustain throughput and reduce communication overhead.

- Deployment Tools – Scale with inference servers like NVIDIA Triton. Combine vertical (more GPUs per node) and horizontal (more nodes) scaling, often under Kubernetes.

- Cluster Orchestration – Production stacks commonly use Kubernetes, Run:AI, or Slurm to allocate GPUs, autoscale, and provide fault tolerance.
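As a toy illustration of the load-balancing side, the sketch below routes each request to the GPU worker with the fewest in-flight requests. `LeastLoadedBalancer` and the worker names are hypothetical. This is not how Triton, Kubernetes, Run:AI, or Slurm schedule work internally; it only shows one common balancing policy.

```python
class LeastLoadedBalancer:
    """Route each request to the GPU worker with the fewest in-flight requests."""

    def __init__(self, worker_names):
        self.in_flight = {name: 0 for name in worker_names}

    def acquire(self):
        """Pick a worker for a new request and count it as in flight."""
        # min() returns the first worker on ties, following insertion order.
        name = min(self.in_flight, key=self.in_flight.get)
        self.in_flight[name] += 1
        return name

    def release(self, name):
        """Mark one request on the given worker as finished."""
        if self.in_flight[name] == 0:
            raise ValueError(f"{name} has no in-flight requests")
        self.in_flight[name] -= 1
```

A caller would wrap each inference call between `acquire()` and `release()`, so that slow requests naturally steer new traffic toward less busy GPUs.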

Real-World Benchmarks & Efficiency Gains

- Sparse DNN Optimization – With sparse kernels and multi-GPU parallelism, studies report up to 4.3× single-GPU speedups and about 10× at scale on V100/A100 GPUs.

- Tensor Parallelism Advances – Recent methods show up to 4× speedup and 3.4× throughput improvement versus earlier baselines in LLM inference.

- Cluster Deployment Performance – Apple-based clusters (for example, M2 Ultra Mac Studios running Mixture-of-Experts models) showed improved cost-efficiency and inference times, although network latency remained a limiting factor.

Best Practices for Enterprise Multi-GPU Setups

| Strategy | Value Delivered |
|---|---|
| Select the Right Parallelism | Use data parallelism for simplicity. Use model or pipeline parallelism for large models. Combine when needed. |
| Optimize Interconnects | Leverage NVLink, RDMA, InfiniBand for low-latency, high-bandwidth communication. |
| Use Orchestration & Autoscaling | Run under Kubernetes or Triton for elastic scaling and better GPU utilization. |
| Optimize Memory & Loading | Keep NVMe and model load pipelines from becoming bottlenecks. |
| Implement Caching & Batching | Batch requests and manage KV-caches to reduce latency and cost per token. |
| Measure & Tune Continuously | Track utilization, throughput, and latency. Tune batch sizes and scheduler settings. |
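
The batching practice can be sketched as a simple policy that trades a bounded wait for larger batches. The function below is a hypothetical, offline simulation over request arrival times. It is not any particular inference server's batching algorithm, and `max_batch_size` and `max_wait_ms` are illustrative tuning knobs.

```python
def form_batches(arrivals, max_batch_size, max_wait_ms):
    """Group request arrival times (ms, sorted ascending) into batches.

    A batch closes when it is full, or when the next request arrives more
    than max_wait_ms after the batch's first request.
    """
    batches, current = [], []
    for t in arrivals:
        batch_full = len(current) == max_batch_size
        waited_too_long = bool(current) and t - current[0] > max_wait_ms
        if current and (batch_full or waited_too_long):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches
```

For example, `form_batches([0, 1, 2, 3, 20, 21], max_batch_size=3, max_wait_ms=5)` groups the requests as `[[0, 1, 2], [3], [20, 21]]`. Raising `max_wait_ms` tends to produce fuller batches and higher throughput at the cost of added queueing latency, which is why these settings are worth measuring and tuning together.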

How KorBon AI Adds Value

Inference-as-a-Service

Deploy scalable multi-GPU inference without managing the cluster. We handle orchestration, autoscaling, and observability.

Consulting & Optimization

We help you choose the right parallelism strategy, size batches, and tune schedulers and interconnects for your performance and cost goals.

End-to-End AI Development

From Triton deployments to custom APIs, batching logic, caching layers, and observability dashboards, we deliver full-stack solutions that maximize throughput and efficiency.

Conclusion

As AI workloads expand, mastering multi-GPU inference scaling becomes essential for achieving performance and cost-efficiency at production scale. From parallelism strategies to orchestration, memory architecture to real-world benchmarks, each layer contributes to ROI. With KorBon AI as your partner, you gain not just the infrastructure, but optimized and dependable high-speed inference that scales with your business.